Text to Blockbuster

How SORA will change the face of Advertising, Media and Business

Welcome to the Shep Report, where I help business leaders like you stay on top of the emerging technology landscape and how it will impact your business.

Today I'm looking at OpenAI's SORA, a text-to-video generation tool that could have as big an impact as the introduction of ChatGPT itself.

For your viewing pleasure, I've put together a short five-minute video (please like and subscribe to my new Shep Report YouTube channel), or you can read the article below.

What is it?

SORA was announced just over a month ago along with a set of example videos that showed a serious leap beyond anything else we've seen in the current generation of text-to-video tools like RunwayML, Fliki and Pika.

In essence, SORA turns written prompts into detailed, high-quality videos.

These videos can be up to a minute long and maintain visual quality with relatively little hallucination. SORA can also take existing images or videos and modify them based on a prompt, potentially breathing new life into product catalogs and advertising assets.

Complex, photorealistic, creative scenes with multiple characters, specific types of motion, and accurate details of the subject and background can be generated in minutes.

Prompt: "The camera directly faces colorful buildings in Burano Italy. An adorable dalmatian looks through a window on a building on the ground floor. Many people are walking and cycling along the canal streets in front of the buildings."

What's truly amazing about SORA is that the model understands not only WHAT the user has asked for in the prompt, but also HOW those things exist in the physical world.

This means that when a user enters a description, SORA can identify the key elements and themes within that prompt. For instance, if a user describes a "sunset over a bustling cityscape," SORA recognizes the primary subjects (the sunset and the cityscape) as well as the implied atmosphere of activity and the transition from day to night. This level of content comprehension comes from SORA's training on a vast dataset of videos and their associated text descriptions.

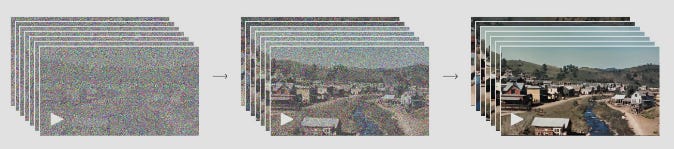

Examples of improvements in fidelity that scale as training compute increases.

By analyzing these video and text pairs, SORA learns to correlate specific words and phrases with visual patterns, enabling it to generate relevant, detailed scenes from text prompts.

Prompt: The camera follows behind a white vintage SUV with a black roof rack as it speeds up a steep dirt road surrounded by pine trees on a steep mountain slope, dust kicks up from it’s tires, the sunlight shines on the SUV as it speeds along the dirt road, casting a warm glow over the scene. The dirt road curves gently into the distance, with no other cars or vehicles in sight. The trees on either side of the road are redwoods, with patches of greenery scattered throughout. The car is seen from the rear following the curve with ease, making it seem as if it is on a rugged drive through the rugged terrain. The dirt road itself is surrounded by steep hills and mountains, with a clear blue sky above with wispy clouds.

More impressively, SORA's understanding of HOW those elements exist in the physical world adds a layer of realism. This involves not just recognizing objects and settings, but understanding their interactions, physical properties, and behaviors in real-world contexts.

For example, SORA knows that buildings in a cityscape are static while cars and pedestrians move, and it understands the natural progression of colors in a sunset, from bright yellows to deep purples. This understanding is rooted in SORA's diffusion model architecture and its training process, which includes not only static images but also video. Exposure to motion and change over time allows SORA to simulate realistic physics and cause-and-effect relationships in its generated videos, although it still occasionally struggles with complex physics.

Prompt: A stylish woman walks down a Tokyo street filled with warm glowing neon and animated city signage. She wears a black leather jacket, a long red dress, and black boots, and carries a black purse. She wears sunglasses and red lipstick. She walks confidently and casually. The street is damp and reflective, creating a mirror effect of the colorful lights. Many pedestrians walk about.

Beyond text prompts, SORA can take an existing image or video and extend it forward or backward in time, or create multiple camera angles, all while keeping the characters and environment consistent.

How does it work?

Without diving too deep into the technical jargon:

Imagine you're telling a story to an artist, describing a scene with as much detail as possible. In the case of SORA, you're providing a text prompt instead of speaking out loud. This text could describe anything from a bustling cityscape at sunset to a serene beach scene.

Once SORA receives your text prompt, it begins to interpret and understand the content, similar to how a filmmaker reads a script before shooting a movie. It doesn't just look at the words individually but tries to grasp the overall narrative and the specifics: what's happening, who's involved, and where it's taking place. This understanding is crucial for generating a video that matches your description.

Think of SORA as a director breaking down a script into shots and scenes. It uses something called a diffusion model, which starts with a sort of digital "static" or noise. Imagine a blank canvas that's not entirely blank but filled with random colors and shapes. Over time, SORA refines this chaos into a coherent scene, much like an artist painting over a rough sketch, gradually adding details until the picture comes to life.

The process of turning the initial "static" image into a video involves a series of steps where SORA adds and refines details. It's akin to sketching, outlining, and then painting, but for each frame in a video. This is where SORA's understanding of the physical world comes into play. It knows how objects and people should move and interact, ensuring that the resulting video feels realistic. For instance, if your prompt includes a person walking down the street, SORA ensures they move naturally and that their surroundings change appropriately.
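For readers who want a peek under the hood, here is a deliberately tiny Python toy of the "static to picture" idea described above. It is not how SORA works: in a real diffusion model, a trained neural network guided by your prompt predicts a cleaner version of the frame at each step. In this sketch that network is replaced by a hypothetical oracle that simply knows the target pixel values, and the "image" is just a short list of numbers. The function name `toy_denoise` and its parameters are illustrative assumptions.

```python
import random


def toy_denoise(target, steps=10, seed=0):
    """Toy illustration of iterative refinement (NOT SORA's actual method).

    Starts from random "static" and, at each step, moves part of the way
    toward a predicted clean image. The predictor here is a hypothetical
    oracle that returns the target; a real diffusion model would use a
    trained network conditioned on the text prompt instead.
    Returns every intermediate "frame" so the progress can be inspected.
    """
    rng = random.Random(seed)
    # Step 0: digital "static", one random value per pixel.
    x = [rng.uniform(-1.0, 1.0) for _ in target]
    history = [x]
    for t in range(steps, 0, -1):
        predicted_clean = target  # hypothetical stand-in for a learned denoiser
        # Blend toward the prediction; small moves early, and the final
        # step (t == 1, alpha == 1.0) lands exactly on the prediction.
        alpha = 1.0 / t
        x = [(1 - alpha) * xi + alpha * pi for xi, pi in zip(x, predicted_clean)]
        history.append(x)
    return history


if __name__ == "__main__":
    target = [0.0, 0.5, 1.0, 0.5]  # made-up "pixel" values for the demo
    for step, frame in enumerate(toy_denoise(target)):
        err = max(abs(a - b) for a, b in zip(frame, target))
        print(f"step {step:2d}: max error {err:.3f}")
```

Printing the largest pixel error at each step shows the static shrinking gradually until the last step reproduces the target exactly, which mirrors the sketch-outline-paint analogy in miniature.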

After going through the refining process, SORA produces a video that matches your description. This video isn't just a series of disconnected images; it's a smooth, coherent sequence that tells a story or depicts a scene as you envisioned. The technology aims to keep characters, objects, and backgrounds consistent throughout the video, maintaining the illusion of reality.

Before SORA's videos reach viewers, they go through a series of safety checks, much like a film goes through a review process to ensure it meets certain standards and doesn't contain harmful content. OpenAI is putting measures in place to detect and prevent the generation of misleading or inappropriate videos so that SORA's capabilities are used responsibly. SORA itself is currently at this review stage: it is still being refined, with plans to launch publicly later this year at a cost in line with OpenAI's other offerings.

How will SORA change how you do business?

In the world of Entertainment and Media, SORA is shaping up to be quite the tool for creatives, making it easier to whip up visual concepts, animations, and special effects. This could mean more room for creativity and for trying out new ideas, while massively reducing time and budget constraints.

In Marketing and Advertising, SORA is setting the stage for ads that really speak to their audience. Imagine video campaigns that feel like they were made just for you, thanks to the magic of AI. This could let advertisers quickly see what works and what doesn't, making sure their messages are on point. And slipping a product into any video scene? That's becoming surprisingly straightforward.

Heading over to Training, Education, and Human Resources, SORA has the potential to change how we learn and onboard. Picture interactive training materials and e-learning modules that can be whipped up in no time, all tailored to the specifics of your role. HR could use SORA to create videos that truly show off what makes their company a great place to work.

Corporate Communications could also get a makeover, turning internal updates into engaging video stories. At a minimum, it would make company news a bit more interesting.

For those in Product Development, imagine being able to visualize prototypes for stakeholders and get immediate feedback, making it quicker to refine and improve them.

In Customer Service, SORA could help create clear, engaging instructional videos. This means better help for customers and a smoother experience when they need support.

And it's not just about business. SORA will be landing in the hands of everyday folks, too. We could be looking at a new wave of content creation, where making everything from fun memes to movie-quality videos is easier for everyone. Just yesterday, the first set of videos created by a handful of lucky creatives hit the internet. Be sure to check out the lovely, whimsical short "Air Head" by shy kids.

This technology could end up in the hands of billions of consumers. We might be on the cusp of a whole new revolution in content creation and engagement, at a scale that could eclipse YouTube, Netflix, or Hollywood.

I hope you found this helpful and informative. If you are a business leader who wants to stay on top of what will be an ongoing period of great change and disruption, I would welcome being your guide.