One-Day Project: From Crazy Idea to Viral Video Using Gen AI Tools

Experiments in Claude, Midjourney, Runway, and ElevenLabs

As a child I was obsessed with Monty Python, Vic Reeves, and a host of British sketch comedians who brought their surrealism and quirky low-budget concepts to our four channels' worth of TV screens. I've always harbored a secret desire to do something similar, and as someone fascinated by the intersection of technology and creativity, I recently challenged myself to create a viral-worthy comedy video using nothing but AI tools, and to do it all in less than a day on a shoestring budget.

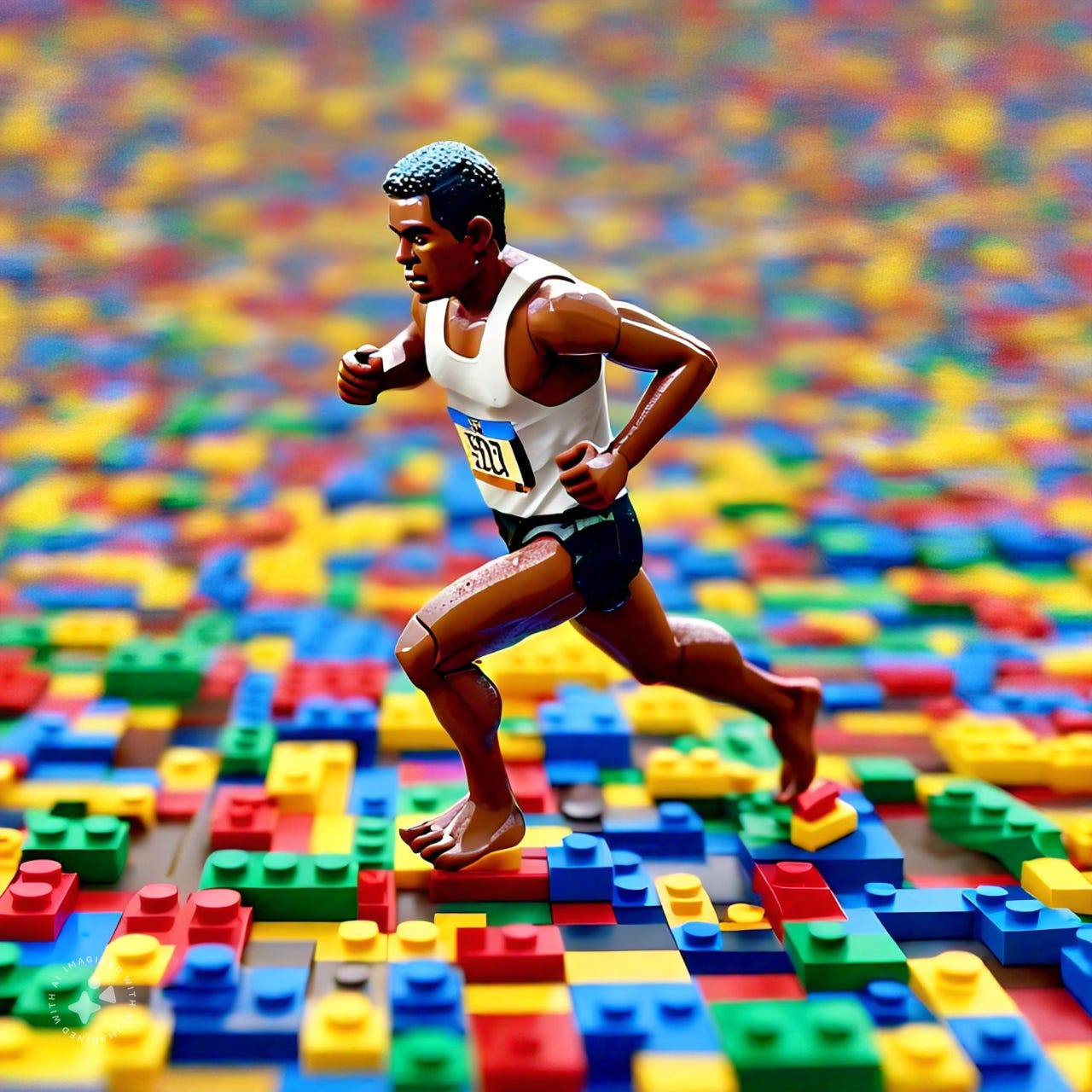

The result is below: a parody of Olympic coverage featuring athletes running barefoot over LEGO bricks. As a father of two young children, it's a concept that resonated with me viscerally.

The lesser-known Barefoot Over Lego 100m Dash, Live from the Paris 2024 Olympics (created using Midjourney, Runway and ElevenLabs). A Deep Flake Production.

#midjourney #runway #genai #deepflake #funnyvideo

Here's how I did it, step by step, using some of the most cutting-edge Gen AI tools available.

This project wasn't just about creating something funny (though it certainly was that). It was about testing the limits of what's possible with AI-assisted content creation and understanding how these tools could revolutionize industries from marketing to product design and beyond.

In this article, I'm going to take you through my entire process - from the initial spark of an idea to the final edited video. I'll share the exact prompts I used, the challenges I faced, and the solutions I found. Whether you're a marketer looking to create engaging content on a budget, a product designer wanting to rapidly prototype ideas, or a business leader curious about the potential of AI, I hope my experience will inspire you to explore these powerful tools for yourself.

So, let's dive in and see how I went from a vague notion to a fully realized video in just 24 hours, spending less than $30 along the way.

Conception and Ideation with Claude AI

My journey began with a simple desire: to create something that would make people laugh while showcasing the capabilities of AI. But where to start? That's where Anthropic's Claude AI came in.

I opened up my conversation with Claude and typed: "I want to create a funny video using AI tools. It should be something unexpected that plays on common experiences or pain points. Any ideas?"

Claude came back with several suggestions, but one caught my eye: a parody of Olympic events. This got me thinking about the intersection of the grand and the mundane, as well as the Silly Olympics of the aforementioned Pythons. Inspired by this, I pushed further:

"What if we combined the Olympics with a common household annoyance? Something everyone can relate to but seems absurd in an Olympic context."

Claude's response included several ideas, but one stood out: "How about a 'Barefoot LEGO Walking Race'? Athletes must traverse a track covered in LEGO bricks, mimicking the universal pain of stepping on LEGOs left on the floor."

I knew immediately this was the winner. It was absurd, relatable, and had great visual potential. To develop the details, I asked Claude: "Let's develop this 'Barefoot LEGO Walking Race' idea. How would it be presented as an Olympic event? What would the track look like? How would athletes prepare?"

Claude provided a wealth of details:

The track would be a standard 400m oval but covered in a rainbow of LEGO bricks.

Athletes would wear typical track uniforms but no shoes.

There would be "LEGO pits" instead of water jumps in steeplechase.

Coaches might be seen giving last-minute advice on LEGO avoidance techniques.

To add authenticity to the concept, I asked Claude to help me brainstorm commentary: "Generate some lines a British sports commentator might say during the Barefoot LEGO Walking Race."

Claude came up with gems like:

"And they're off! Look at Johnson hop-scotching through that minefield of 2x4 bricks!"

"Oh! Silva's hit a particularly pointy piece there. You can see the agony on his face as he limps towards the 200m mark."

"Incredible technique from Zhang, using the side of her foot to slide over those smooth 1x8 plates!"

Finally, I asked Claude about the practical aspects of creating this video: "What elements would I need to create to make this LEGO race video feel like authentic Olympic coverage?"

Claude suggested several key components:

A realistic starting line shot with athletes in starting blocks

Close-ups of grimacing faces and pained feet

Wide shots of the entire LEGO-covered track

Slow-motion replays of particularly dramatic LEGO encounters

On-screen graphics showing athlete names, times, and LEGO-related statistics

Throughout this ideation process, I was constantly amazed by Claude's ability to understand the concept and build upon it in creative ways. The AI didn't just regurgitate information - it genuinely helped me develop and refine my ideas, saving me hours of solo brainstorming.

Visual Creation with Midjourney

With my concept solidified thanks to Claude AI, it was time to bring the Barefoot LEGO Walking Race to life visually. For this, I turned to Midjourney, an AI image generation tool known for its ability to create highly detailed and often surreal images.

My first attempt at a Midjourney prompt was admittedly a bit of a mess. I tried to include every detail I could think of:

"/imagine a Photorealistic Olympic TV broadcast of a group of distance runners at the starting line of a race in an Olympic stadium. The runners are barefoot and waiting to start on a red running track, which is unexpectedly strewn with colorful small LEGO bricks, as if a toddler has dumped a giant bin of LEGO blocks across the lanes. The runners are in starting positions, wearing national uniforms with various bright colors. The background includes a grassy area, Olympic banners, and a cheering crowd. On-screen graphics with athlete names and countries, golden hour lighting, realistic sports photography, no illustrations, --q 2 --ar 16:9"

The result was... not quite what I was hoping for. The image was cluttered, the athletes looked more like LEGO figures than humans, and the track didn't really resemble a LEGO-strewn surface.

Realizing I needed help, I turned to the Midjourney Discord community. An experienced user named InfoGuru provided some invaluable advice:

Upload and use an initial source image to help provide a baseline of what you’re imagining.

Be more literal in descriptions (e.g., "legs and feet of athletes running toward viewer" instead of conceptual descriptions).

Use promptlets separated by double colons (::) to organize positive and negative elements.

Use negative weights to remove unwanted elements (e.g., "shoes, sneakers::-0.6" to avoid generating footwear).

Utilize image weights (--iw) and aspect ratios (--ar) for more control.
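To make the multi-prompt syntax from these tips concrete, here is a minimal Python sketch that assembles a prompt from weighted promptlets and parameters. The function name `build_mj_prompt` is hypothetical, and the check that the weights sum to a positive number reflects my understanding of Midjourney's multi-prompt rules rather than anything from the community advice above. It only produces the text you would paste after `/imagine`; it does not talk to Midjourney.

```python
from typing import Optional


def build_mj_prompt(
    promptlets: list[tuple[str, float]],
    params: dict[str, str],
    image_url: Optional[str] = None,
) -> str:
    """Assemble a Midjourney multi-prompt string (hypothetical helper).

    promptlets: (text, weight) pairs, rendered as "text::weight".
                Negative weights push the image away from that text.
    params:     parameter names without dashes, e.g. {"iw": "0.8", "ar": "11:9"}.
    image_url:  optional source-image URL; image prompts go at the start.
    """
    # Assumption: Midjourney rejects multi-prompts whose weights
    # do not add up to a positive number.
    total = sum(weight for _, weight in promptlets)
    if total <= 0:
        raise ValueError(f"promptlet weights must sum to > 0, got {total}")

    # ":g" prints 1.0 as "1" and -0.6 as "-0.6", matching hand-typed prompts.
    body = " ".join(f"{text}::{weight:g}" for text, weight in promptlets)
    flags = " ".join(f"--{name} {value}" for name, value in params.items())

    # Drop empty parts so there are no stray double spaces.
    return " ".join(part for part in (image_url, body, flags) if part)


if __name__ == "__main__":
    # Rebuild the refined prompt from this article (weights 1 and -0.6).
    prompt = build_mj_prompt(
        [
            (
                "a photograph of full-length barefoot grimacing male Olympic "
                "athletes wearing their national color jerseys and shorts, "
                "running on a track covered in thousands of tiny multi-color "
                "Lego blocks with the white track lines still visible",
                1.0,
            ),
            ("shoes, sneakers", -0.6),
        ],
        {"iw": "0.8", "ar": "11:9"},
    )
    print("/imagine " + prompt)
```

Keeping the positive and negative promptlets as separate pairs makes it easy to tweak one weight at a time, which is essentially what the next couple of hours of refinement amounted to.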

Armed with this knowledge, I uploaded a picture of a starting line grabbed from YouTube and refined my prompt:

"/imagine a photograph of full-length barefoot grimacing male Olympic athletes wearing their national color jerseys and shorts, running on a track covered in thousands of tiny multi-color Lego blocks with the white track lines still visible::1 shoes, sneakers::-0.6 --iw 0.8 --ar 11:9"

This produced much better results, but the athletes still looked a bit LEGO-like themselves. I spent the next couple of hours tweaking and refining my prompt. Some key learnings:

Removing the word "LEGO" from parts of the prompt helped create more realistic human figures.

Using the Vary Region tool allowed me to redraw specific parts of the image, like faces, to make them more human-like.

Adding specific fabric descriptions (e.g., "satin tracksuits") helped steer the AI away from creating LEGO-like figures.

My final prompt looked something like this:

"/imagine a photograph of full-length barefoot grimacing male and female Olympic athletes wearing satin national color jerseys and shorts, running on a red track covered in thousands of tiny multi-color plastic building blocks, white track lines visible::1 shoes, sneakers, cartoons::-0.6 --iw 0.8 --ar 11:9"

With a working prompt, I generated several images to use in my video:

A starting line shot with athletes in starting blocks

A mid-race shot showing athletes grimacing as they run

A finish line shot with an athlete crossing in apparent pain and relief

For each of these, I had to slightly modify my base prompt to capture the specific scene. To make the images look more like freeze-frames from a broadcast, I added elements like on-screen graphics, a slight motion blur to imply movement, and the hint of a camera operator or boom mic at the edge of the frame.

Animating with RunwayML

With my collection of Midjourney-generated images in hand, it was time to add motion to the Barefoot LEGO Walking Race. For this, I turned to RunwayML, an AI-powered video generation tool.

First, I uploaded my Midjourney images to Runway. I had three key scenes: the starting line tension, mid-race grimacing and pain, and the triumphant (yet agonizing) finish.

My first attempt at a Runway prompt was quite detailed:

"A dynamic motion, slow-motion, full-length shot of barefoot, shoe-less, grimacing athletes running towards the camera. Running on a standard Olympic track covered in thousands of tiny multi-color Lego blocks, with white lane lines clearly visible through the Legos."

The result was interesting but not quite right. The athletes moved, but their feet kept materializing shoes, and the LEGO blocks on the track disappeared as the animation progressed. Clearly, I needed to refine my approach.

I reached out to the RunwayML community for advice and received a crucial tip: keep it simple. One user, Erik, suggested using a much shorter prompt:

"Five guys running towards the camera"

Surprisingly, this produced better results. The motion was smoother, and while it didn't capture all the details, it gave me a base to work with.

Building on this simpler approach, I experimented with various short prompts, each focusing on a specific aspect of the scene:

"Athletes running barefoot on a track"

"Grimacing runners moving towards the camera"

"Olympic race on a LEGO-covered track"

I found that running these simpler prompts multiple times and then combining the best parts of each output in the video editor yielded the best results.

One persistent issue was the AI's tendency to add shoes to the barefoot runners. To counter this, I added a specific instruction: "Maintain bare feet throughout the animation". This helped, although it wasn't perfect. I made a note to pay extra attention to the feet during the editing phase.

To really sell the illusion of an Olympic broadcast, I created separate animations for different stages of the race and different camera shots, then arranged them into a somewhat linear (if abbreviated) narrative leading to a punchline: a foot massage at the end.

Adding Voice with ElevenLabs

To bring my Barefoot LEGO Walking Race to life, I needed the perfect British sports commentator voice. Enter ElevenLabs, an AI voice generation tool.

After experimenting with various options, I settled on the "Brian" voice in ElevenLabs. It had just the right mix of authority and excitement that I was looking for in a sports commentator.

Using the commentary ideas generated earlier with Claude AI, I wrote a short script for the video. It included lines like:

"Welcome to the inaugural Barefoot LEGO Walking Race! The athletes are at the starting line, each hoping their feet are calloused enough for this grueling event."

"Oh! Johnson from the USA has hit a particularly pointy brick! You can see the agony on his face as he hops towards the 200-meter mark."

I input my script into ElevenLabs, adjusting the settings for tone and pacing to match the excitement of a sports broadcast. The result was surprisingly realistic, capturing the cadence and energy of a real commentator.

Some words, particularly athlete names and "LEGO," needed a bit of tweaking in their pronunciation. I experimented with different spellings (like "Laygo" for LEGO) to get the AI to say them correctly.
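If you find yourself respelling the same words in every take, it can help to keep the respellings in one place and apply them to the script before pasting it into ElevenLabs. Below is a minimal Python sketch of that idea. The helper name `respell_for_tts` and the `RESPELLINGS` table are hypothetical; only the "Laygo" spelling comes from my own experiments, and the script itself never touches the ElevenLabs service.

```python
import re

# Hypothetical respelling table: words the voice mispronounced, mapped to
# phonetic spellings that read better aloud. Add athlete names as needed.
RESPELLINGS = {
    "LEGO": "Laygo",
}


def respell_for_tts(script: str, respellings: dict[str, str]) -> str:
    """Swap whole-word, case-insensitive matches for phonetic spellings."""
    if not respellings:
        return script
    # Longest keys first, so a multi-word entry wins over its shorter parts.
    keys = sorted(respellings, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(k) for k in keys) + r")\b",
        re.IGNORECASE,
    )
    # Look up replacements case-insensitively.
    lookup = {k.lower(): v for k, v in respellings.items()}
    return pattern.sub(lambda m: lookup[m.group(0).lower()], script)


if __name__ == "__main__":
    line = "Welcome to the inaugural Barefoot LEGO Walking Race!"
    print(respell_for_tts(line, RESPELLINGS))
```

Because the match is whole-word, a plural such as "LEGOs" is left alone; if your script uses it, add it as its own entry.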

Editing and Final Assembly

With all my elements created, it was time to bring everything together. I used RunwayML's built-in video editor for this final phase.

I started by arranging my animated clips in order: starting line, mid-race, and finish line. I trimmed and adjusted each clip to create a smooth flow.

I imported the ElevenLabs-generated commentary and carefully synced it with the video, ensuring that the comments matched the on-screen action.

After a final review and a few minor tweaks, I exported the video. The entire editing process took about 2-3 hours.

The Result

What started as a crazy idea had become a reality: a minute-long video clip that looked remarkably like authentic Olympic coverage of the world's most painful race. From the starting pistol to the grimacing athletes to the excited commentary, every element came together to create a somewhat amusing yet believably realistic piece of content. As of today, I've had over 2,000 views from a single post on TikTok. My career in influencing has clearly taken off :)

Total time spent: Approximately 15-20 hours (including learning time)

Total cost: Less than $30 in subscription fees to various AI tools

Why should I care?

This project was more than just a fun exercise in content creation. It demonstrated the incredible potential of AI tools in creative processes. In less than a day, and with a budget that wouldn't even cover a professional camera rental, I was able to produce a video that would have previously required a full production team, actors, and expensive equipment.

The implications for businesses are enormous. Here are just a few potential applications:

Rapid Prototyping: Product designers could use these tools to quickly visualize and iterate on ideas, saving time and resources in the early stages of development.

Personalized Marketing: Companies could create customized content for different audience segments at scale without the need for multiple expensive video shoots.

Training and Education: Businesses could produce high-quality instructional videos or simulations quickly and cost-effectively.

Event Promotion: Event organizers could create enticing previews or recaps of their events without the need for on-site video crews.

Real Estate and Tourism: Virtual tours and property showcases could be created or enhanced using these AI tools.

Concept Testing: Marketers could rapidly produce different versions of an ad or campaign to test with focus groups before committing to full production.

Of course, there are considerations. These tools are powerful but not perfect. They require human creativity, problem-solving, and oversight to produce truly compelling results. As these technologies evolve, we'll need to grapple with questions of originality, copyright, and the changing nature of creative work. The state of the art is also moving fast: even in the week since I made the video, new tools like Flux and Kling (from China) have brought notable improvements in clarity and realism. We're all still waiting eagerly to see how these compare with OpenAI's much-anticipated Sora, which I've written about previously.

But one thing is clear: AI-assisted content creation is not a future possibility - it's a present reality. And for businesses willing to embrace and experiment with these tools, the potential for innovation, efficiency, and engaging content creation is boundless.

So why not give it a try? Your next viral marketing campaign, product visualization, or creative breakthrough might be just a prompt away. And who knows? Maybe you'll create the next great Olympic event to rival breakdancing... assuming that wasn't AI...
